AWS EKS - Cluster provisioning

This example demonstrates provisioning an Amazon Elastic Kubernetes Service (EKS) cluster on the AWS cloud. The deployment consists of:

- AWS EKS cluster

- Security Group

- Network

- Supporting AWS resources (IP addresses, network interfaces, and so on).

In this example, we deploy only the cluster. Later, more advanced examples (multi-cloud examples) use this setup as the basis for deploying a containerized service.

Prerequisites

This example expects the following prerequisites:

- An installed and accessible Cloudify Manager. This can be a Cloudify Hosted service trial account, a Cloudify Premium Manager, or a Cloudify Community Manager.

- Access to AWS infrastructure, including an access key ID and secret access key for your AWS account.

Cloudify CLI or Cloudify Management Console?

Cloudify offers multiple user interfaces. Some users find the Cloudify Management Console (web-based UI) more intuitive, while others prefer the Cloudify CLI (command-line interface). This tutorial, like the ones that follow, describes both methods.

Community version: some of the options described in this guide, such as setting up secrets, are not available in the Community version of the Management Console (web UI). You can still perform all of this functionality using the Cloudify CLI.

Cloudify Management Console

This section explains how to perform the steps described above using the Cloudify Management Console. The Cloudify Management Console and the Cloudify CLI can be used interchangeably for all Cloudify activities.

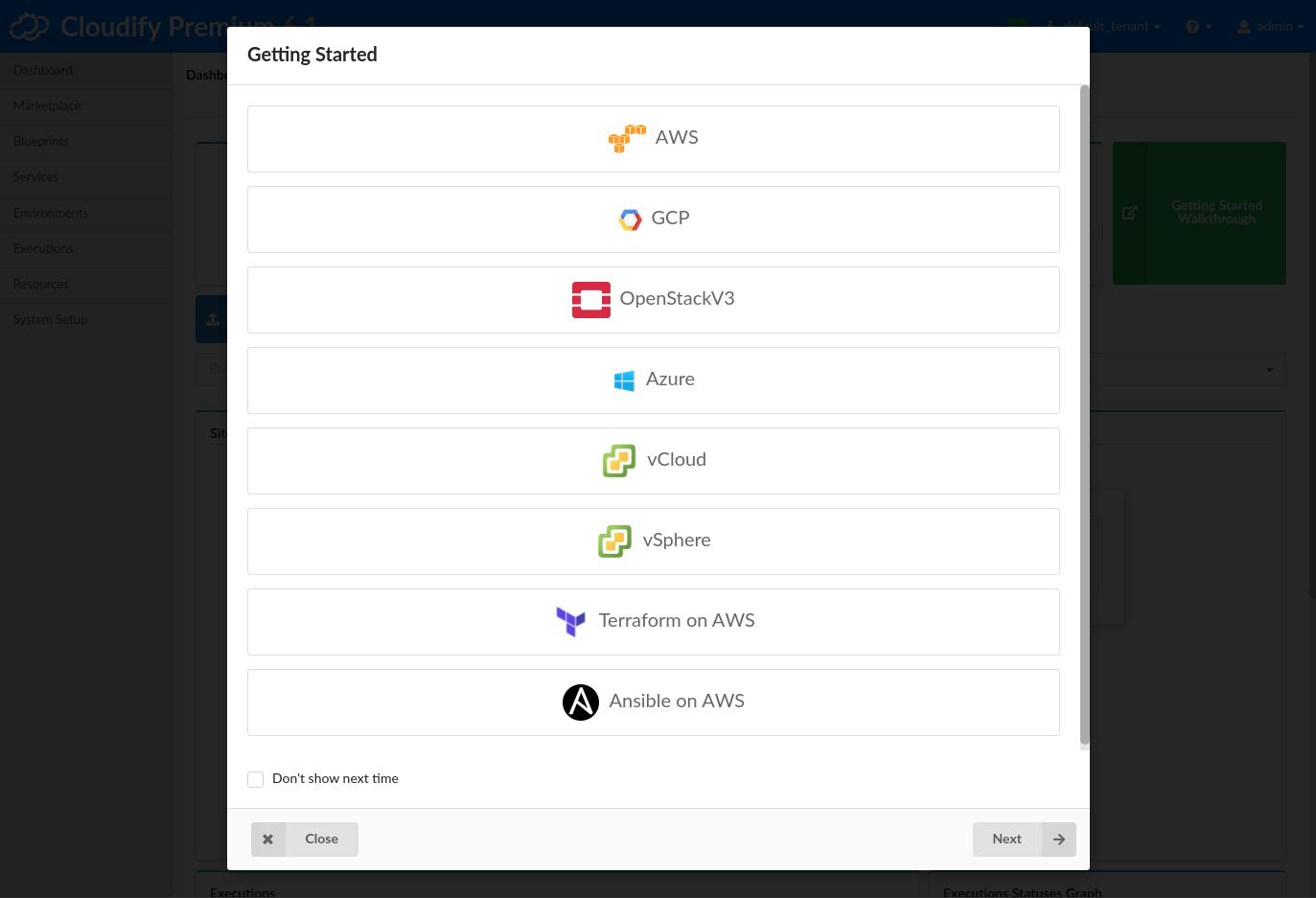

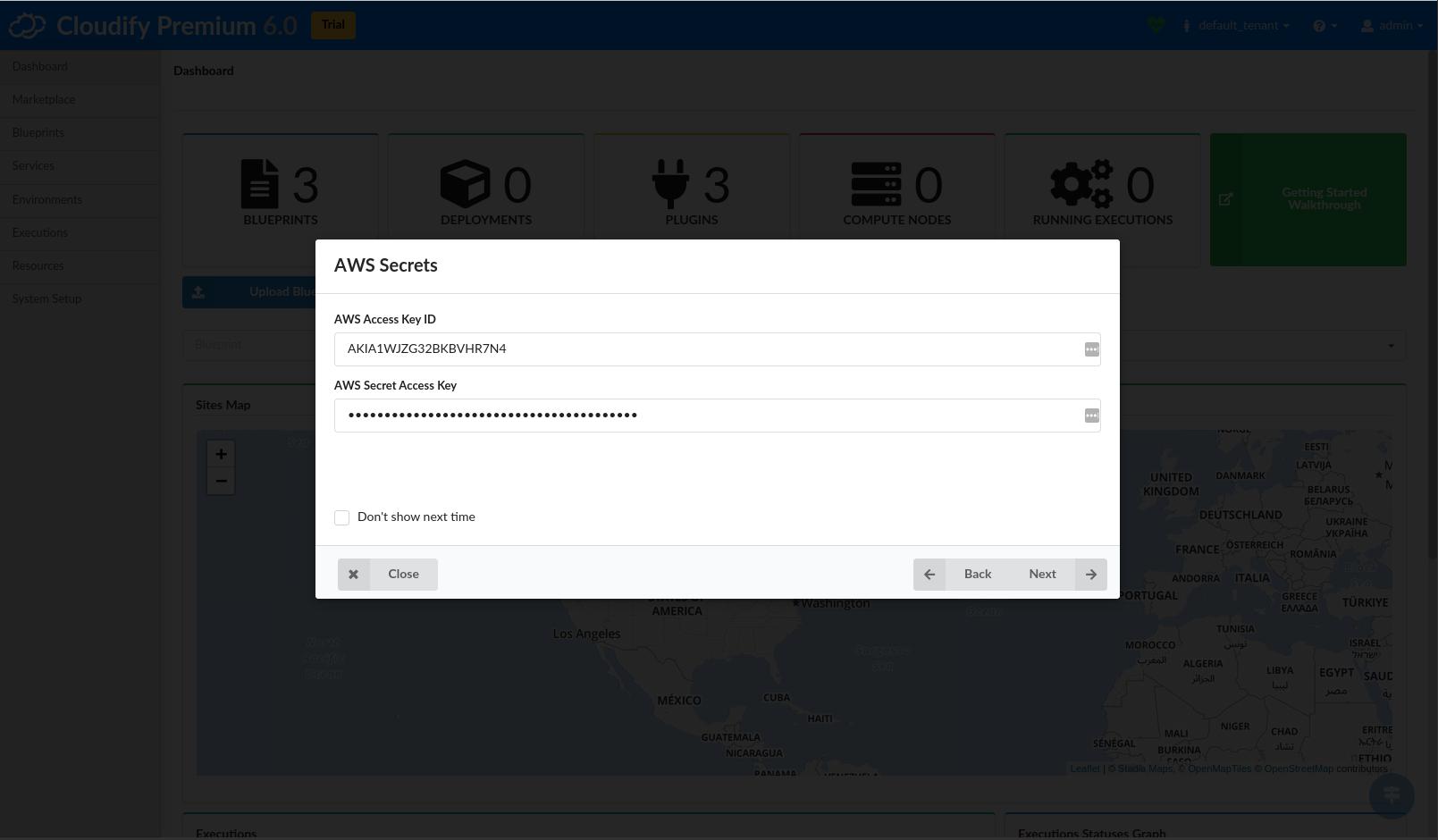

Import Plugins and Secrets

Connecting to AWS requires AWS credentials and the relevant Cloudify plugins. Cloudify recommends storing sensitive information such as credentials in Cloudify secrets, which are kept encrypted and used by the system at run time. Learn more about Cloudify secrets in the Cloudify documentation.

Cloudify version 6+ offers a fast-track process to import both credentials and all necessary plugins. As soon as the Cloudify Management Console loads, it will present several “getting started” options. Choose the AWS option and enter the requested credentials to automatically import the required secrets and plugins.
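You can also create the secrets by hand. This is useful on the Community version or if you simply prefer the CLI. The sketch below is a minimal example with these assumptions:

- The `cfy` CLI is already configured against your Cloudify Manager.
- Your credentials are exported in the standard AWS environment variables `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`.
- The function name `create_aws_secrets` is hypothetical.

The sketch covers secrets only. The plugins still have to be uploaded separately.

```shell
# Hypothetical helper: create the two secrets this example expects, reading
# their values from the standard AWS environment variables.
create_aws_secrets() {
  # Refuse to run if either variable is unset or empty.
  if [ -z "${AWS_ACCESS_KEY_ID:-}" ] || [ -z "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY must be set" >&2
    return 1
  fi
  # Stop after the first failure so a partial setup is easy to spot.
  cfy secrets create aws_access_key_id -s "$AWS_ACCESS_KEY_ID" &&
    cfy secrets create aws_secret_access_key -s "$AWS_SECRET_ACCESS_KEY"
}

# Usage (requires a Cloudify CLI profile pointing at your manager):
#   export AWS_ACCESS_KEY_ID=<your key id> AWS_SECRET_ACCESS_KEY=<your secret>
#   create_aws_secrets
```

Passing a value with `-s` puts it on the `cfy` command line, so on multi-user machines it is briefly visible in the process list.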

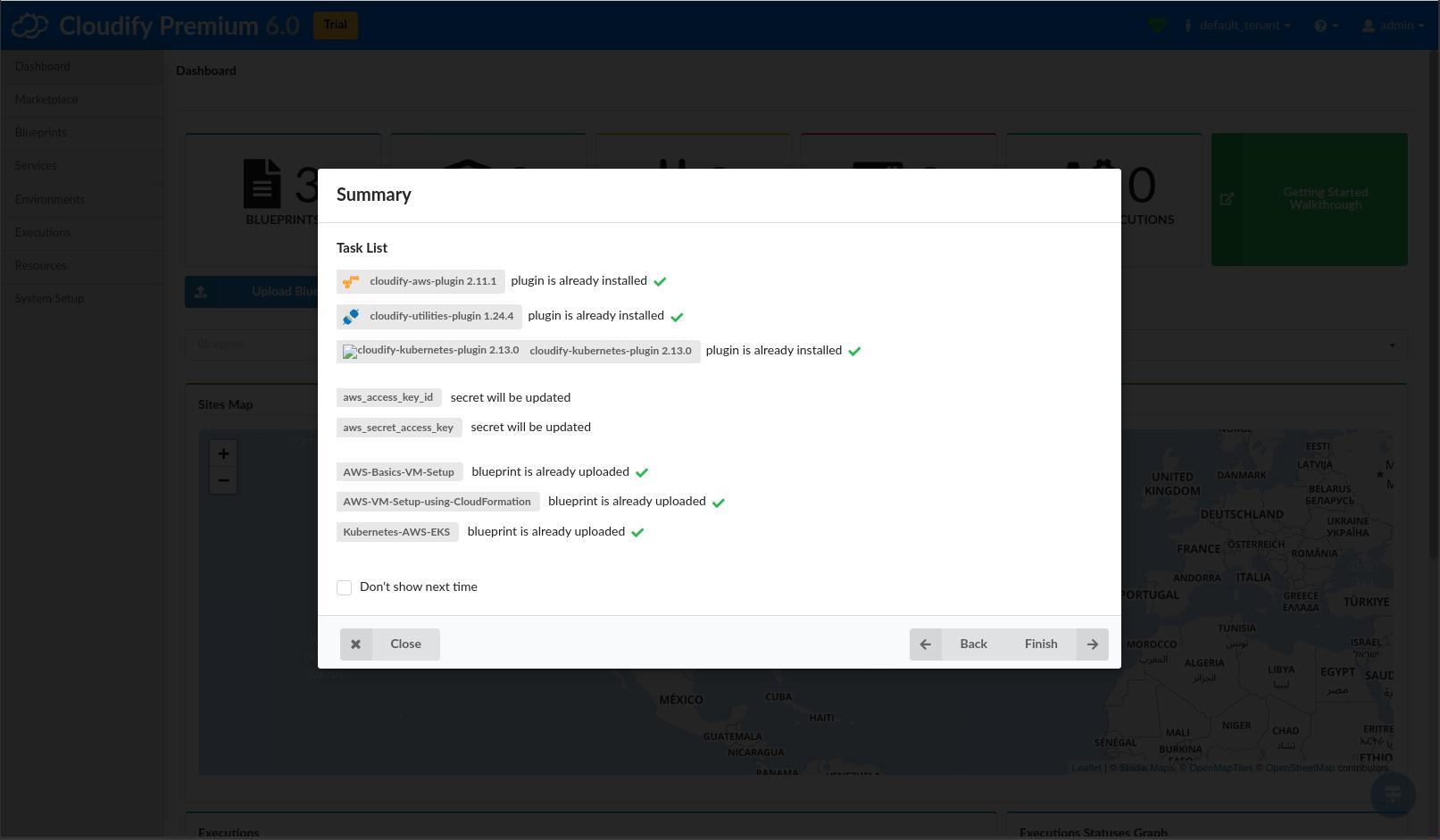

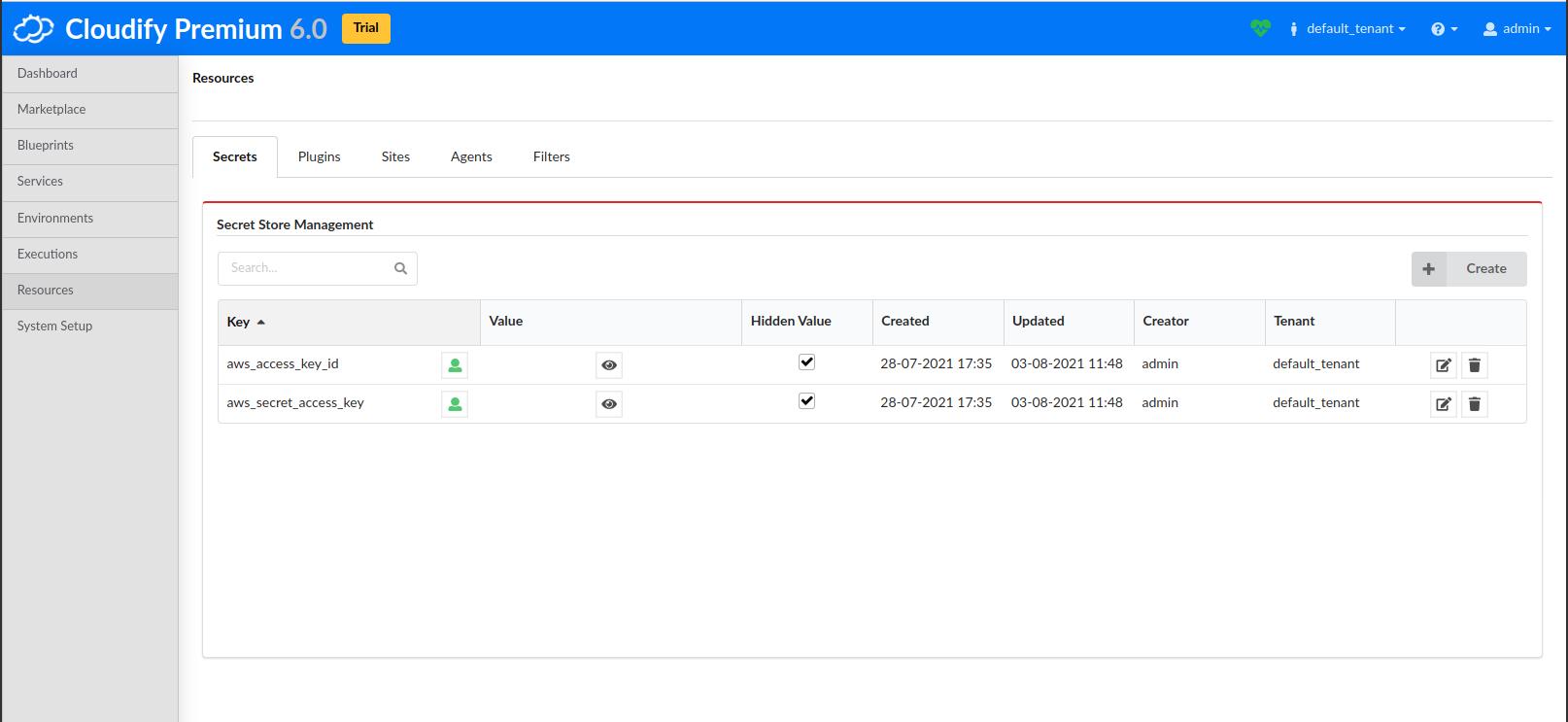

Validate Secrets

To view the imported secrets in the Cloudify Manager, log in to the Cloudify Management Console, open the Resources page, and select the Secrets tab. The following secrets should exist after completing the steps above:

- aws_access_key_id

- aws_secret_access_key
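From the CLI, `cfy secrets list` shows the same information. The helper below is a hypothetical sketch. It reads that listing on standard input and reports whichever of the two expected secret names is missing. It assumes only that each secret key appears verbatim somewhere in the output.

```shell
# Hypothetical helper: read a secrets listing on stdin and report any
# required secret name that does not appear in it.
check_required_secrets() {
  local listing key missing=0
  listing=$(cat)
  for key in aws_access_key_id aws_secret_access_key; do
    # -w: match whole words only, so "aws_access_key_id_old" does not count.
    if ! printf '%s\n' "$listing" | grep -qw -- "$key"; then
      echo "missing secret: $key" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage against a live manager:
#   cfy secrets list | check_required_secrets && echo "secrets OK"
```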

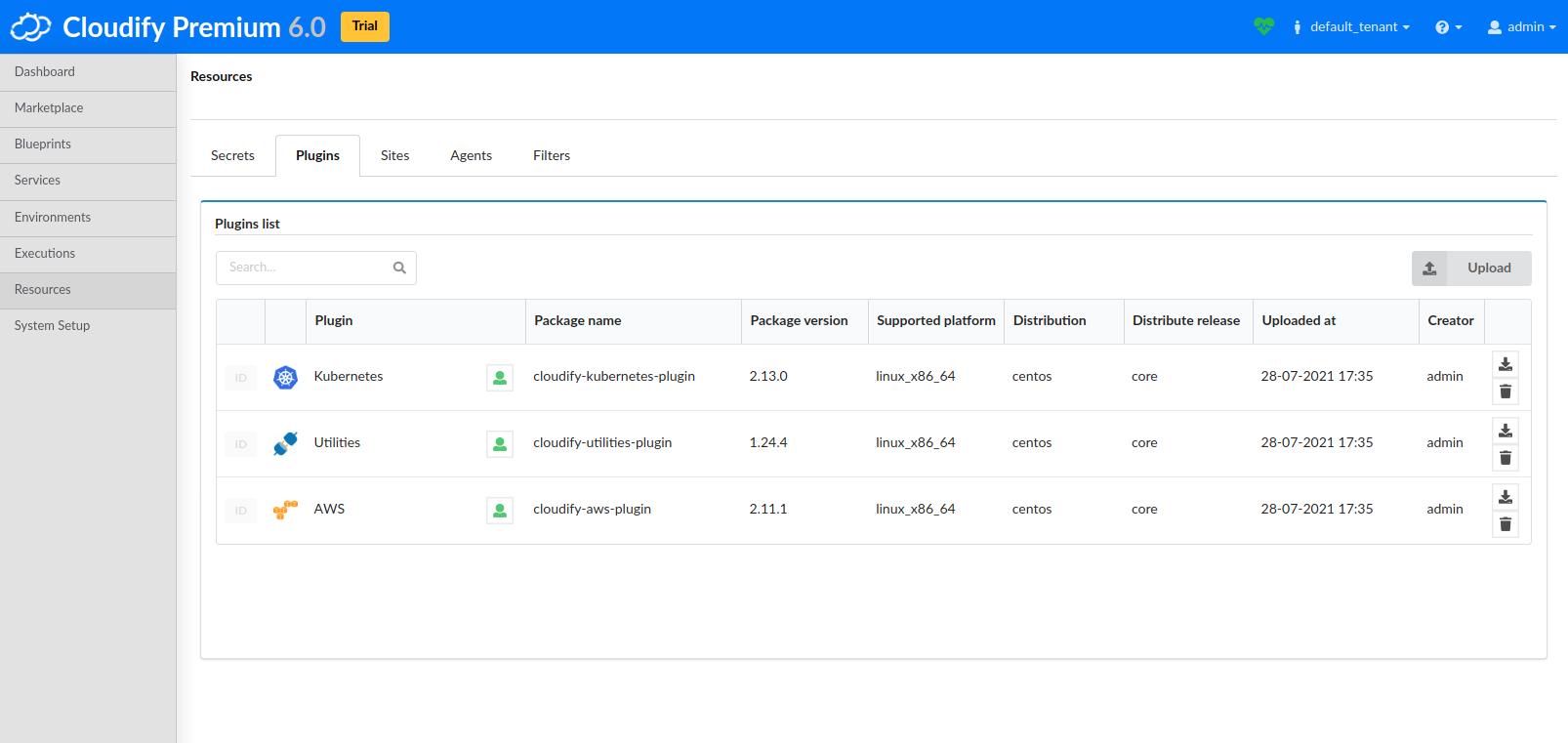

Validate Plugins

To view the imported plugins in the Cloudify Manager, log in to the Cloudify Management Console, open the Resources page, and select the Plugins tab. The following plugins should exist after completing the steps above:

- AWS

- Kubernetes

- Utilities
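The CLI equivalent is `cfy plugins list`. This hypothetical helper checks a listing for any names passed as arguments. The package names in the usage line (`cloudify-aws-plugin`, `cloudify-kubernetes-plugin`, `cloudify-utilities-plugin`) are an assumption about how these plugins are named on your manager. Adjust them to what `cfy plugins list` actually prints.

```shell
# Hypothetical helper: read a listing on stdin and print "ok: <name>" or
# "missing: <name>" for each argument; returns non-zero if any is missing.
require_listed() {
  local listing name status=0
  listing=$(cat)
  for name in "$@"; do
    # -F: treat the name as a fixed string, not a regular expression.
    if printf '%s\n' "$listing" | grep -qF -- "$name"; then
      echo "ok: $name"
    else
      echo "missing: $name"
      status=1
    fi
  done
  return "$status"
}

# Usage against a live manager (package names are assumptions):
#   cfy plugins list | require_listed cloudify-aws-plugin \
#     cloudify-kubernetes-plugin cloudify-utilities-plugin
```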

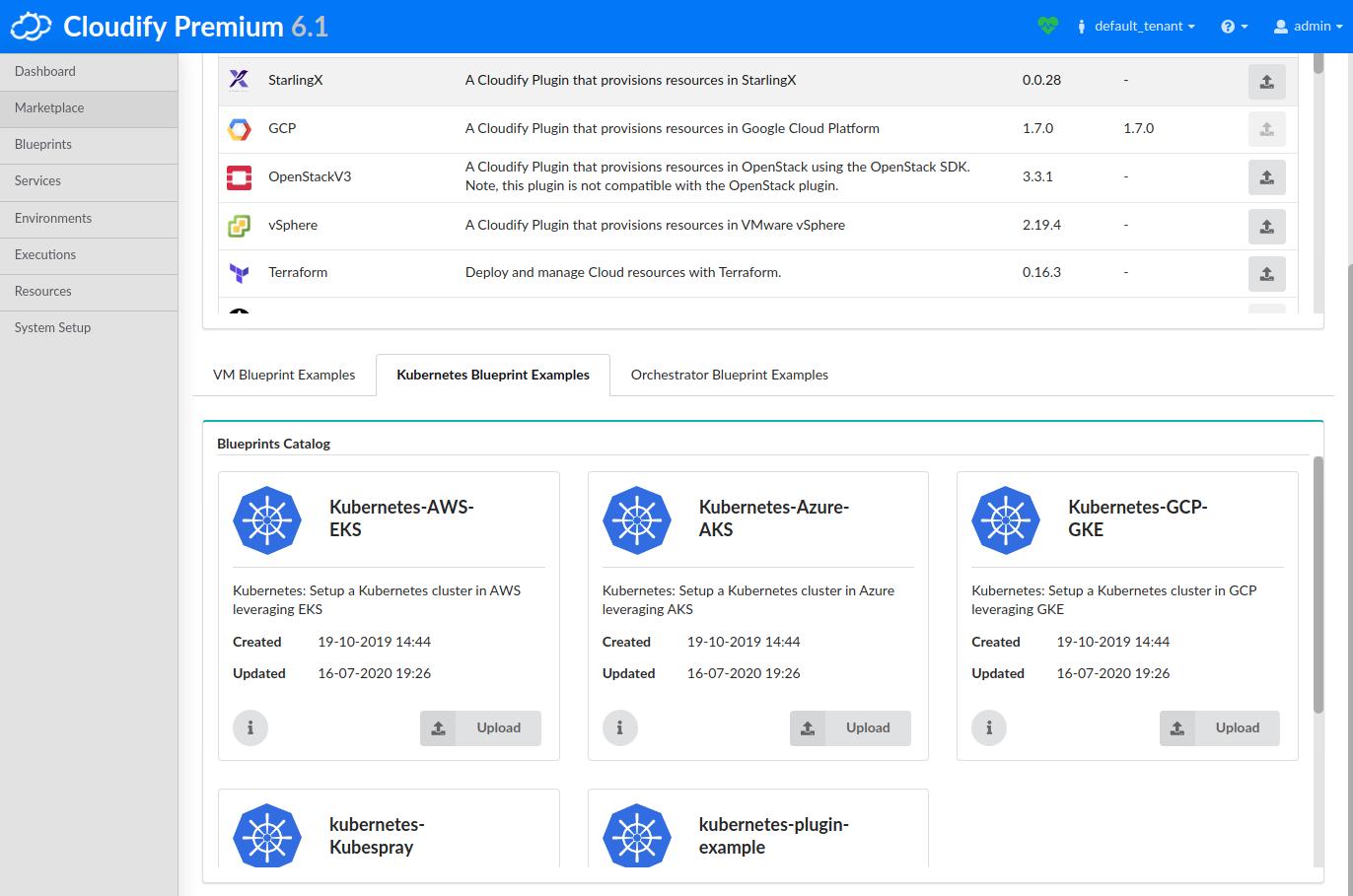

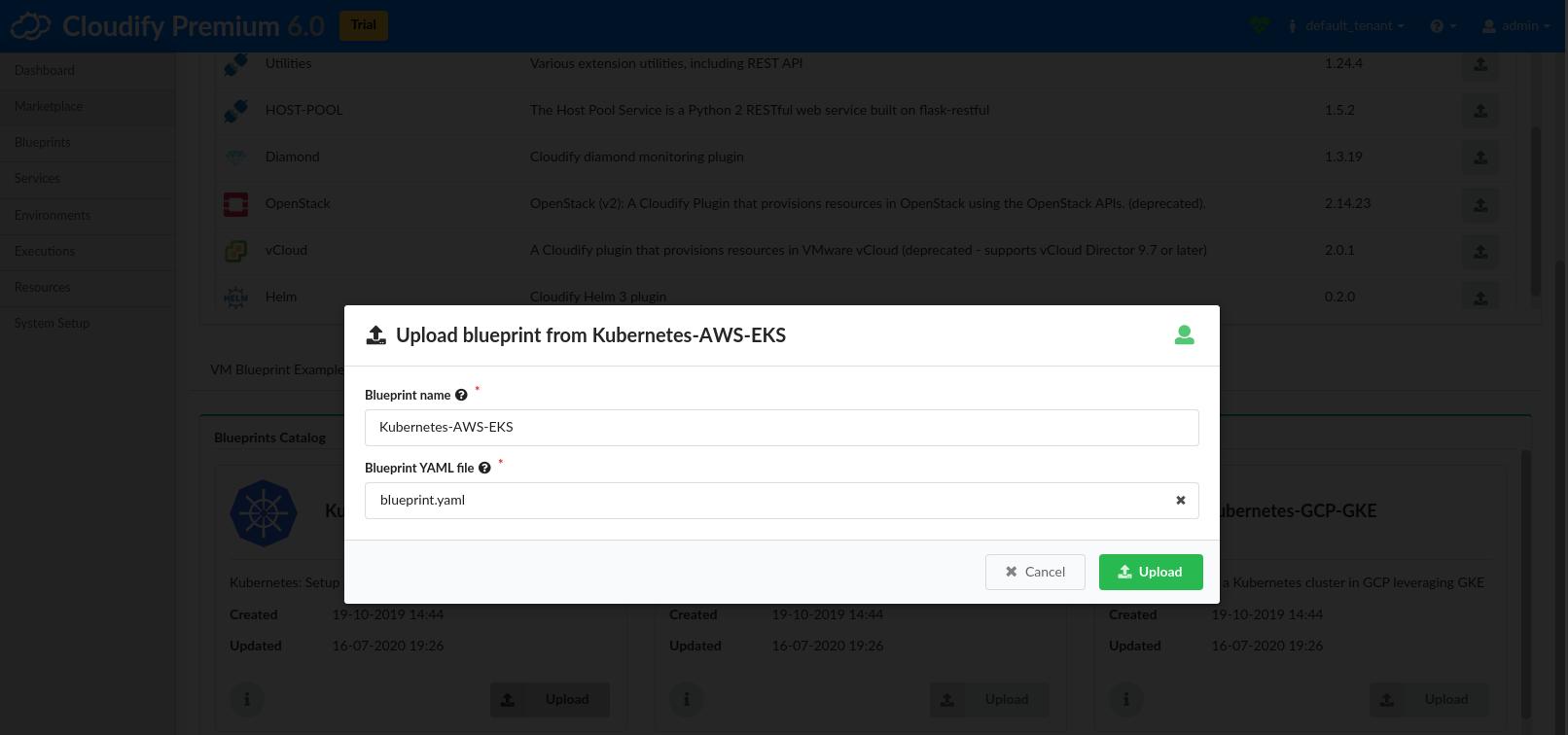

Import Kubernetes Blueprint

Cloudify Manager provides a simple way to provision a Kubernetes cluster on AWS EKS. On the Marketplace page, navigate to the Kubernetes Blueprint Examples tab and upload the Kubernetes-AWS-EKS blueprint.

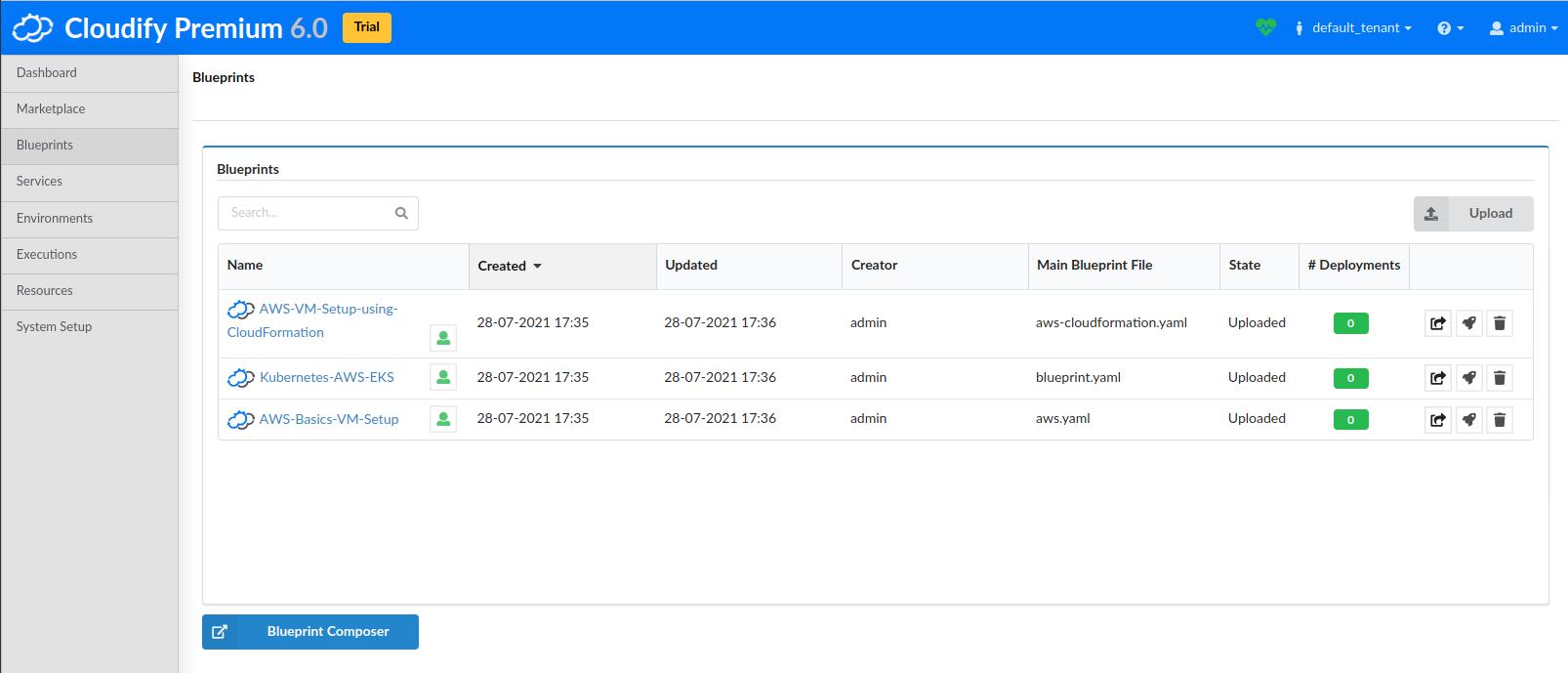

Once imported, the Kubernetes-AWS-EKS blueprint appears on the Blueprints page.
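The blueprint can also be uploaded with `cfy blueprints upload`. In this sketch, the blueprint ID `Kubernetes-AWS-EKS` mirrors the name shown in the Management Console. The blueprint location is left as a parameter because this guide does not give it, and `upload_eks_blueprint` is a hypothetical name. If the main YAML file in your archive has a different name from the CLI's default, pass that name with `-n`.

```shell
# Hypothetical helper: upload the EKS blueprint under the ID used in the
# Management Console. The argument is the path or URL of the blueprint,
# which this guide does not specify.
upload_eks_blueprint() {
  if [ "$#" -ne 1 ]; then
    echo "usage: upload_eks_blueprint <path-or-url-to-blueprint>" >&2
    return 2
  fi
  cfy blueprints upload -b Kubernetes-AWS-EKS "$1"
}

# Usage:
#   upload_eks_blueprint <path-or-url-to-blueprint>
#   cfy blueprints list    # the new blueprint should be listed
```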

Deploy an AWS EKS Cluster

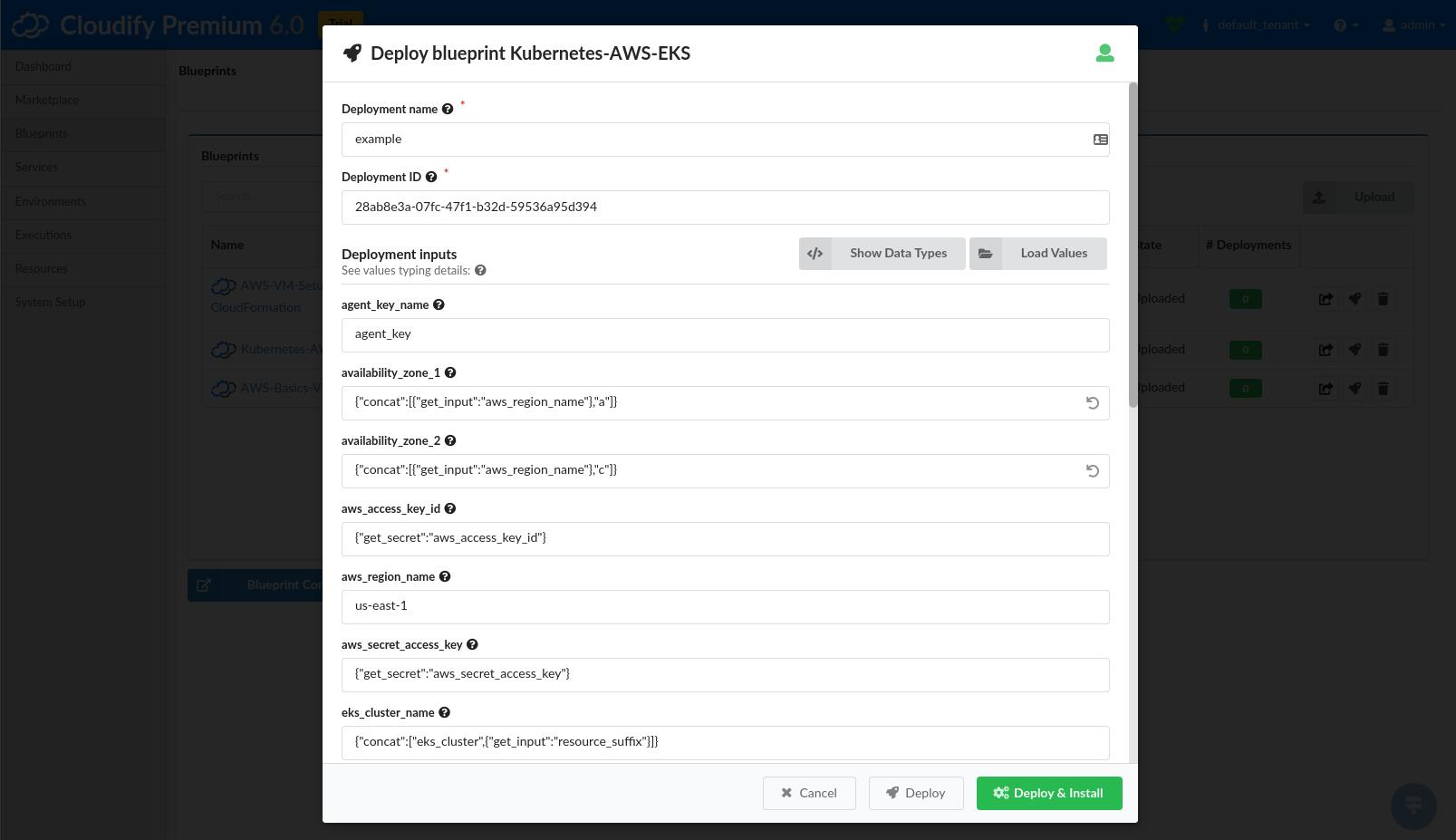

On the Blueprints page, click the Create deployment button for the Kubernetes-AWS-EKS blueprint.

- Enter a deployment name.

- Adjust the region and availability zone inputs to match your preferences.

Click the Deploy & Install button at the bottom of the form to start the deployment. On the following page, click the Execute button.

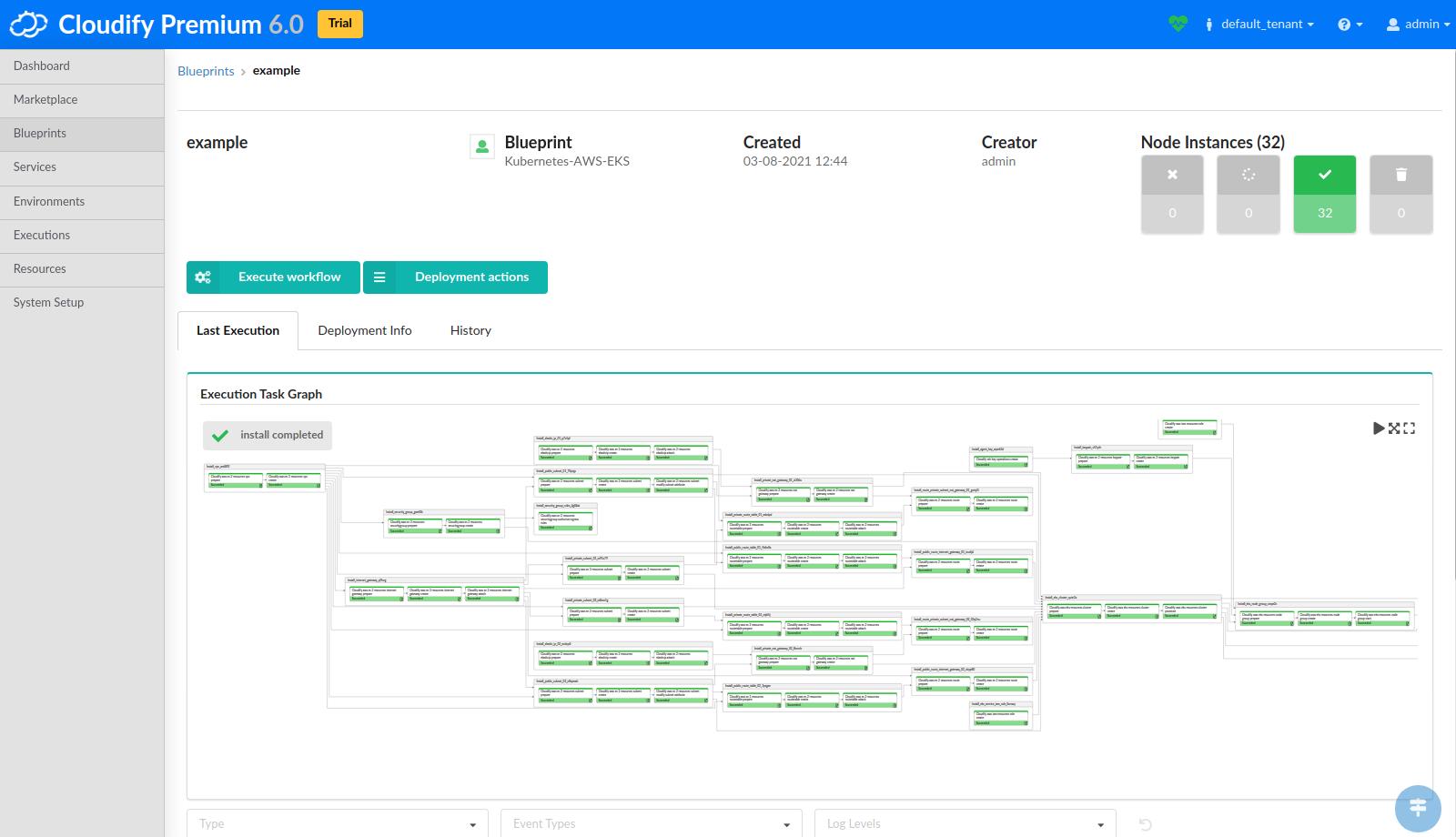

You now have a Cloudify deployment running the default install workflow. Cloudify will begin interfacing with AWS to deploy an AWS EKS Kubernetes cluster. You can track the progress of the deployment in the Execution Task Graph panel on the Deployments page.
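From the CLI, the same deployment takes two steps: create the deployment from the blueprint, then start its `install` workflow. This is a sketch only, under these assumptions:

- The input name `aws_region_name` is assumed. Check the blueprint's `inputs` section for the actual region and availability zone input names.
- `deploy_eks` is a hypothetical helper name.

```shell
# Hypothetical helper: create a deployment from the uploaded blueprint and
# start its install workflow. aws_region_name is an assumed input name.
deploy_eks() {
  if [ "$#" -ne 2 ]; then
    echo "usage: deploy_eks <deployment-id> <aws-region>" >&2
    return 2
  fi
  cfy deployments create -b Kubernetes-AWS-EKS -i "aws_region_name=$2" "$1" &&
    cfy executions start install -d "$1"
}

# Usage:
#   deploy_eks my-eks-cluster us-east-1
#   cfy executions list -d my-eks-cluster    # check the execution status
```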

Cloudify CLI

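With the Cloudify CLI, `cfy install` uploads a blueprint, creates a deployment, and runs the `install` workflow in a single command. It is the one-shot counterpart of the Management Console steps. This is a minimal sketch under these assumptions:

- The blueprint ID `Kubernetes-AWS-EKS` matches the Console name.
- The input name `aws_region_name` and the helper name `install_eks` are assumptions.
- You must supply the blueprint location yourself.

```shell
# Hypothetical helper: upload, create the deployment, and install in one call.
install_eks() {
  if [ "$#" -ne 3 ]; then
    echo "usage: install_eks <blueprint-path-or-url> <deployment-id> <aws-region>" >&2
    return 2
  fi
  # aws_region_name is an assumed input name; check the blueprint's inputs.
  cfy install -b Kubernetes-AWS-EKS -d "$2" -i "aws_region_name=$3" "$1"
}

# Usage:
#   install_eks <path-or-url-to-blueprint> my-eks-cluster us-east-1
```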

Using the AWS EKS Cluster

Install CLI tools

Kubectl

AWS documentation: https://docs.aws.amazon.com/eks/latest/userguide/install-kubectl.html
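On Linux x86_64, one common way to install kubectl is the upstream Kubernetes procedure sketched below. This swaps in the kubernetes.io instructions in place of the EKS-specific binaries from the AWS page linked above, and `install_kubectl` is a hypothetical helper. kubectl is supported within one minor version of the cluster's control plane. If the latest stable release is too far ahead of your EKS version, download a matching release instead.

```shell
# Hypothetical helper following the upstream kubernetes.io steps for
# Linux x86_64 (amd64). Change "amd64" in the URL for other architectures.
install_kubectl() {
  local version
  # Look up the latest stable release tag, e.g. "v1.xx.y".
  version=$(curl -fsSL https://dl.k8s.io/release/stable.txt) || return 1
  # Download that release's kubectl binary into the current directory.
  curl -fLO "https://dl.k8s.io/release/${version}/bin/linux/amd64/kubectl" || return 1
  # Copy it onto the PATH with root ownership and executable permissions.
  sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl || return 1
  # Print the client version to confirm the install.
  kubectl version --client
}
```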

AWS CLI

AWS documentation: https://docs.aws.amazon.com/cli/latest/userguide/install-cliv1.html

For Linux users, we recommend the quick install method using Python 3 and pip, which installs AWS CLI version 1 into a virtual environment:

```shell
python3 -m venv venv
source venv/bin/activate
pip3 install awscli

# Check the AWS CLI version
aws --version

# Configure the CLI (enter your access credentials here)
aws configure

# Confirm everything is working
aws sts get-caller-identity

# Create a Kubectl config
# replace "your_cluster_name" and "your_region" with the values you
# used in your Deployment earlier.
aws eks --region your_region update-kubeconfig --name your_cluster_name
```

Verify access

```shell
# List version
kubectl version --kubeconfig ~/.kube/config

# List namespaces
kubectl get ns --kubeconfig ~/.kube/config

# List nodes
kubectl get nodes --kubeconfig ~/.kube/config
```
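Worker nodes may take a little while to register after the install workflow finishes. A simple way to block until they are all `Ready` is `kubectl wait`. The helper name `wait_for_nodes` and the 300-second default are illustrative choices, not values from this guide.

```shell
# Hypothetical helper: block until every node reports the Ready condition,
# or fail once the timeout (default 300s) expires.
wait_for_nodes() {
  kubectl wait --for=condition=Ready nodes --all --timeout="${1:-300s}"
}

# Usage:
#   wait_for_nodes        # wait up to 300s
#   wait_for_nodes 10m    # wait up to 10 minutes
```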